Chronic Respiratory Diseases (CRDs), including Chronic Obstructive Pulmonary Disease (COPD), affect millions of people around the globe, particularly in Low- and Middle-Income Countries (LMICs) such as Pakistan, and account for millions of years lived with disability. According to the World Health Organization (WHO), COPD is the third leading cause of death worldwide, with approximately 3.29 million deaths in 2019, and LMICs are disproportionately affected. Respiratory tract diseases contribute substantially to the Global Burden of Disease, accounting for a large number of years lived with disability (YLD) and disability-adjusted life years (DALYs). COPD alone accounts for 118 million YLD, while Upper Respiratory Infections (URIs) result in 84.4 age-standardised DALYs per hundred thousand people across all ages and sexes. These pulmonary disorders also impose a significant economic burden.

Because effective treatment depends on a correct diagnosis, several techniques are used to image the chest cavity non-invasively, including Computed Tomography (CT), Magnetic Resonance Imaging (MRI), ultrasonography, and Positron Emission Tomography (PET). While these modalities allow accurate assessment, their cost can be prohibitive in many settings. X-ray imaging offers an alternative that is both cost-effective and widely available. Chest X-rays (CXRs) are the most commonly used imaging modality in radiology for diagnosing pulmonary diseases, with close to 2 billion CXRs taken every year. A CXR provides a grayscale image of the lungs in which abnormalities can be identified as opacities, among other markers. However, the sheer volume of CXRs strains healthcare systems and consumes a large share of radiologists' time and resources, which makes an automated CXR analysis system highly desirable. Furthermore, an image-level diagnosis alone is insufficient, since a disease can affect multiple lung regions; this region-level information is crucial for assessing the severity and progression of the condition.

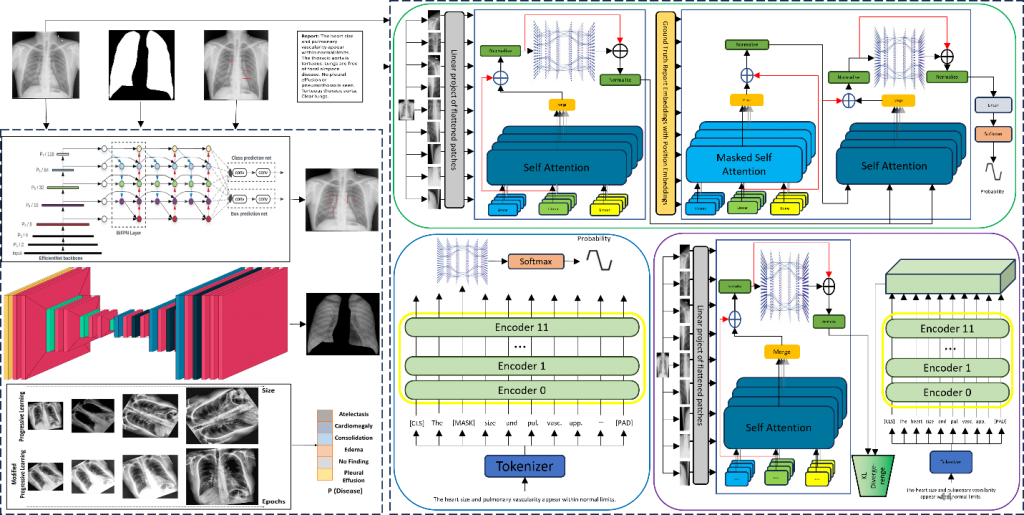

To address these challenges, this research proposes a unified framework, shown in Figure 1, that classifies diseases, provides a severity score for a subset of lung diseases by segmenting the lungs into six regions, and generates chest X-ray reports.
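The exact zoning and scoring rules are not spelled out here, so the following is only a minimal sketch of how a six-region score could be derived from segmentation output. It assumes binary lung and opacity masks as NumPy arrays, splits the image into left and right halves at the vertical midline, divides each lung's vertical extent into upper, middle, and lower thirds, and awards one point per zone whose opacity fraction exceeds a hypothetical `threshold`. The function name `six_zone_severity` and the threshold value are illustrative assumptions, not the framework's actual method.

```python
import numpy as np

def six_zone_severity(lung_mask, opacity_mask, threshold=0.05):
    """Hypothetical 0-6 score: one point per lung zone whose opacity
    fraction exceeds `threshold` (assumed rule, for illustration only)."""
    lung = lung_mask.astype(bool)
    opacity = opacity_mask.astype(bool) & lung  # ignore opacity outside the lungs
    mid_col = lung.shape[1] // 2  # assumption: lungs separated at the image midline
    score = 0
    for cols in (slice(0, mid_col), slice(mid_col, lung.shape[1])):
        side = lung[:, cols]
        rows = np.where(side.any(axis=1))[0]
        if rows.size == 0:
            continue  # no lung pixels in this image half
        # Split this lung's vertical extent into upper, middle and lower thirds.
        edges = np.linspace(rows[0], rows[-1] + 1, 4).astype(int)
        for r0, r1 in zip(edges[:-1], edges[1:]):
            zone_area = side[r0:r1].sum()
            if zone_area == 0:
                continue
            fraction = opacity[r0:r1, cols].sum() / zone_area
            if fraction > threshold:
                score += 1
    return score
```

Because the result is a count over six zones, it always stays in the single-digit range 0 to 6; a real scheme might instead grade each zone on a multi-level scale agreed with radiologists.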

The classification sub-module uses a modified progressive learning technique in which the amount of augmentation applied at each step is capped. Additionally, an ensemble of four deep learning models, each taking advantage of different augmentation techniques, improves the sub-module's performance and generalizability. The segmentation sub-module relies on an attention map generated by the network itself, which allows it to achieve results on par with networks that have an order of magnitude or more parameters. Severity scoring, developed with the help of radiologists, is introduced for four thoracic diseases and provides a single-digit score corresponding to the spread of opacity across the lung segments.
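The capped progressive schedule and the ensemble can be illustrated with a small sketch. It assumes a progressive-learning setup in which both image size and augmentation magnitude grow linearly over a fixed number of training stages, with the magnitude clipped at a cap (`mag_cap`), and an ensemble that simply averages per-class probabilities. The names `progressive_schedule` and `ensemble_probs`, the linear growth, and plain averaging are all assumptions for illustration; the actual schedule and fusion rule may differ.

```python
import numpy as np

def progressive_schedule(num_stages, min_size, max_size, min_mag, max_mag, mag_cap):
    """Hypothetical per-stage (image_size, augmentation_magnitude) pairs.

    Both quantities grow linearly across stages; the augmentation
    magnitude is clipped at `mag_cap` (assumed form of the capping)."""
    schedule = []
    for i in range(num_stages):
        t = i / (num_stages - 1) if num_stages > 1 else 1.0
        size = int(round(min_size + t * (max_size - min_size)))
        magnitude = min(min_mag + t * (max_mag - min_mag), mag_cap)
        schedule.append((size, magnitude))
    return schedule

def ensemble_probs(prob_list):
    """Average per-class probabilities from several models (e.g. four)."""
    return np.mean(np.stack(prob_list, axis=0), axis=0)
```

For example, `progressive_schedule(4, 128, 512, 0.0, 15.0, 10.0)` yields image sizes 128, 256, 384, and 512, while the augmentation magnitude rises from 0 but never exceeds 10 in the final stages.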

The report generation sub-module of the proposed framework generates a CXR report describing the findings from a single frontal-view CXR. It employs an encoder and a decoder: the encoder splits the image into patches to produce hidden states, and the decoder uses these encoded hidden states to generate word probabilities, from which the final report is built. A foundation model is first fine-tuned in an unsupervised manner and then used as the teacher to transfer knowledge to a smaller student model via Knowledge Distillation (KD), with the Kullback-Leibler (KL) divergence as the distillation loss. The distilled student model then serves as the encoder, paired with a decoder, for report generation.
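A KL-divergence distillation loss can be sketched as follows. This is the standard temperature-scaled formulation, written in NumPy and assuming that teacher and student both produce logits over the same output space; the temperature value and the function name `kd_kl_loss` are illustrative assumptions rather than the settings used in this work.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    """Temperature-softened softmax over the last axis."""
    z = logits / temperature
    z = z - z.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def kd_kl_loss(student_logits, teacher_logits, temperature=2.0, eps=1e-12):
    """Batch-mean KL(teacher || student) on softened distributions,
    scaled by T^2 so gradient magnitudes stay comparable across temperatures."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = np.sum(p_t * (np.log(p_t + eps) - np.log(p_s + eps)), axis=-1)
    return float(temperature ** 2 * kl.mean())
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge. In a deep learning framework, the same quantity would normally be computed with a built-in KL loss so that gradients flow back into the student.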

This study leverages nine diverse chest X-ray (CXR) datasets comprising nearly 400,000 images from various demographics and locations, including BRAX, Indiana, MIMIC, JSRT, Shenzhen, and SIIM. On BRAX, segmentation achieved F1 scores of 0.924 without fine-tuning and 0.939 with fine-tuning, and severity grading achieved a mean matching score of 80.8%. The proposed modified progressive learning approach yields an average AUROC of 0.88 for classification, outperforming previously reported results by 9%. Incorporating Knowledge Distillation (KD) into report generation improved BLEU-1 by 4% and BERTScore by 7.5% on the Indiana dataset; when combined with pre-training on larger datasets, KD increased BLEU-1 by 7.2% and BERTScore by 3%. On the MIMIC dataset, the framework performed particularly well when both the Findings and Impression sections were considered. Notably, the framework generalized well to a locally collected dataset, achieving a BLEU-1 of 0.3827 and a BERTScore of 0.4392. Overall, this straightforward framework, despite its relatively small parameter count, matches or surpasses existing techniques across its sub-modules.
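To make the BLEU-1 figures concrete, the metric can be computed at the sentence level as clipped unigram precision multiplied by a brevity penalty. The sketch below assumes simple lowercased whitespace tokenization and a single reference report; reported scores are often corpus-level and may use different tokenization, so this `bleu1` helper is illustrative only and will not reproduce the published numbers exactly.

```python
import math
from collections import Counter

def bleu1(candidate, reference):
    """Sentence-level BLEU-1: clipped unigram precision times brevity penalty."""
    cand = candidate.lower().split()  # assumption: whitespace tokenization
    ref = reference.lower().split()
    if not cand:
        return 0.0
    ref_counts = Counter(ref)
    # Each candidate word is credited at most as often as it appears in the reference.
    clipped = sum(min(n, ref_counts[w]) for w, n in Counter(cand).items())
    precision = clipped / len(cand)
    # Penalize candidates shorter than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * precision
```

For instance, `bleu1("heart size normal", "heart size is normal")` has perfect unigram precision but is shorter than the reference, so the score is reduced to exp(-1/3), about 0.72.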

Relevant Publications

- Vision transformer and language model based radiology report generation. MM Mohsan, MU Akram, G Rasool, NS Alghamdi… IEEE Access, 2022

- Attention based automated radiology report generation using CNN and LSTM. M Sirshar, MFK Paracha, MU Akram, NS Alghamdi… PLOS ONE, 2022